NTUST (Taiwan Tech) Computer Graphics Lab

姚智原 Chih-Yuan Yao

Computer Science and Information Engineering

Computer Game, Animation, and Graphics Laboratory

+886-2-2737693

cyuan.yao@gmail.com

Curriculum Vitae

Computer graphics, including mesh processing and modeling, and non-photorealistic rendering (NPR).

Publication

Arbitrary Screen-Aware Manga Reading Framework with Parameter-Optimized Panel Extraction

Reading Manga on smart devices is inconvenient because readers must drag and zoom through the content. This work therefore proposes a user-friendly and intuitive Manga reading framework for arbitrary reading conditions. Our framework creates a reading flow by breaking pages into contextual panels, organizing panels as display units, grouping units into a display list according to reading conditions, and playing the list with designed transitions that simulate eye movement. The core is parameter-optimized panel extraction, which uses the occupied area of extracted panels and the ratio of mutually parallel borders to automate parameter selection for matching identified panel corners and borders. When applied to 70 Manga chapters, our extraction outperforms two state-of-the-art algorithms and successfully locates “break-outs.” Furthermore, a usability test on three chapters demonstrates that our framework is effective and visually pleasing for arbitrary Manga-reading scenarios while preserving contextual ideas through panel arrangement.

Image Vectorization with Real-Time Thin-Plate Spline

Vector graphics have become popular due to their compactness and scalability. However, current representations generally fall short in real-time editability and require intensive labor to create. To solve these problems, this work divides detailed features into patches for localized and parallelized Thin-Plate Spline (TPS) interpolation, which maintains interactive editing and manipulation abilities and preserves complex image details. A user provides a patch labelling map to create explicit parametric patches for editability, and our system extracts an optimal set of curvilinear and transition features using the histogram of the image’s intensity gradient distribution for compactness and scalability. We optimally cluster the extracted features into parameterized patches to parallelize TPS interpolation for real-time TPS kernel construction, inversion, and rasterization. Our real-time vector representation enables us to build an interactive system for detail-maintained image magnification, shape and color editing, abstraction and stylization, and material replacement through cross mapping. Experiments show that our proposed system provides real-time editability while preserving structural and textural information better than raster-space operations, and that it produces high-quality results comparable to existing state-of-the-art vector-based representations.
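
To make the TPS step concrete, the following is a minimal sketch of standard scattered-data Thin-Plate Spline interpolation for a single patch. It is not the paper's implementation: the function names (`tps_kernel`, `solve`, `fit_tps`, `eval_tps`) are hypothetical, the solver is a plain CPU Gaussian elimination rather than a parallel GPU inversion, and the sample points are made up for illustration. It only shows what "kernel construction, inversion, and evaluation" mean for one small set of feature points.

```python
import math

def tps_kernel(r2):
    """Thin-plate radial basis U(r) = r^2 log r, evaluated from r^2 (U(0) = 0)."""
    return 0.0 if r2 == 0.0 else 0.5 * r2 * math.log(r2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def fit_tps(points, values):
    """Build the (n+3)x(n+3) TPS system [[K, P], [P^T, 0]] and solve it.

    Returns n radial weights followed by the affine terms a0, a1, a2.
    """
    n = len(points)
    size = n + 3
    A = [[0.0] * size for _ in range(size)]
    for i, (xi, yi) in enumerate(points):
        for j, (xj, yj) in enumerate(points):
            A[i][j] = tps_kernel((xi - xj) ** 2 + (yi - yj) ** 2)
        A[i][n:] = [1.0, xi, yi]                      # P block
        A[n][i], A[n + 1][i], A[n + 2][i] = 1.0, xi, yi  # P^T block
    return solve(A, list(values) + [0.0, 0.0, 0.0])

def eval_tps(coeffs, points, x, y):
    """Evaluate f(x, y) = a0 + a1*x + a2*y + sum_i w_i * U(|p - p_i|)."""
    n = len(points)
    w, (a0, a1, a2) = coeffs[:n], coeffs[n:]
    rbf = sum(wi * tps_kernel((x - px) ** 2 + (y - py) ** 2)
              for wi, (px, py) in zip(w, points))
    return a0 + a1 * x + a2 * y + rbf

# Illustrative feature points of one patch and their intensities (made-up data).
pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.5)]
vals = [0.1, 0.9, 0.4, 0.7, 0.3]
coeffs = fit_tps(pts, vals)
```

Because each patch has its own small system, the patches can in principle be solved and rasterized independently, which is the property the abstract relies on for parallelization.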

Micrography QR Codes

This paper presents an algorithm to generate micrography QR codes, a novel machine-readable graphic generated by embedding a QR code within a micrography image. The unique structure of micrography makes it incompatible with existing methods used to combine QR codes with natural or halftone images. We exploit the high-frequency nature of micrography in the design of a deformation model that warps individual letters and adjusts font weights to embed a QR code within a micrography. The entire process is supervised by a set of visual quality metrics tailored specifically for micrography, in conjunction with a QR code quality measure aimed at striking a balance between visual fidelity and decoding robustness. The proposed QR code quality measure is based on probabilistic models learned from decoding experiments using popular decoders with synthetic QR codes to capture the various forms of distortion that result from image embedding. Experimental results demonstrate the efficacy of the proposed method in generating high-quality micrography QR codes from a wide variety of inputs. The ability to embed QR codes at multiple scales makes it possible to produce a wide range of diverse designs. Experiments and user studies were conducted to evaluate the proposed method both qualitatively and quantitatively.

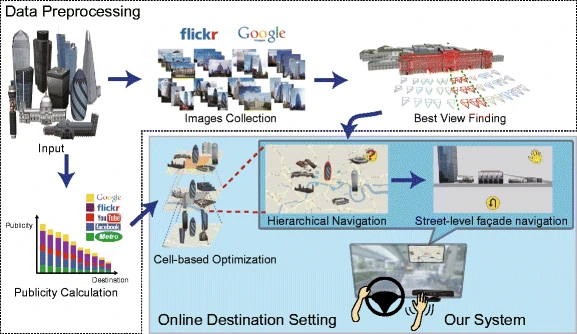

Destination selection based on consensus-selected landmarks

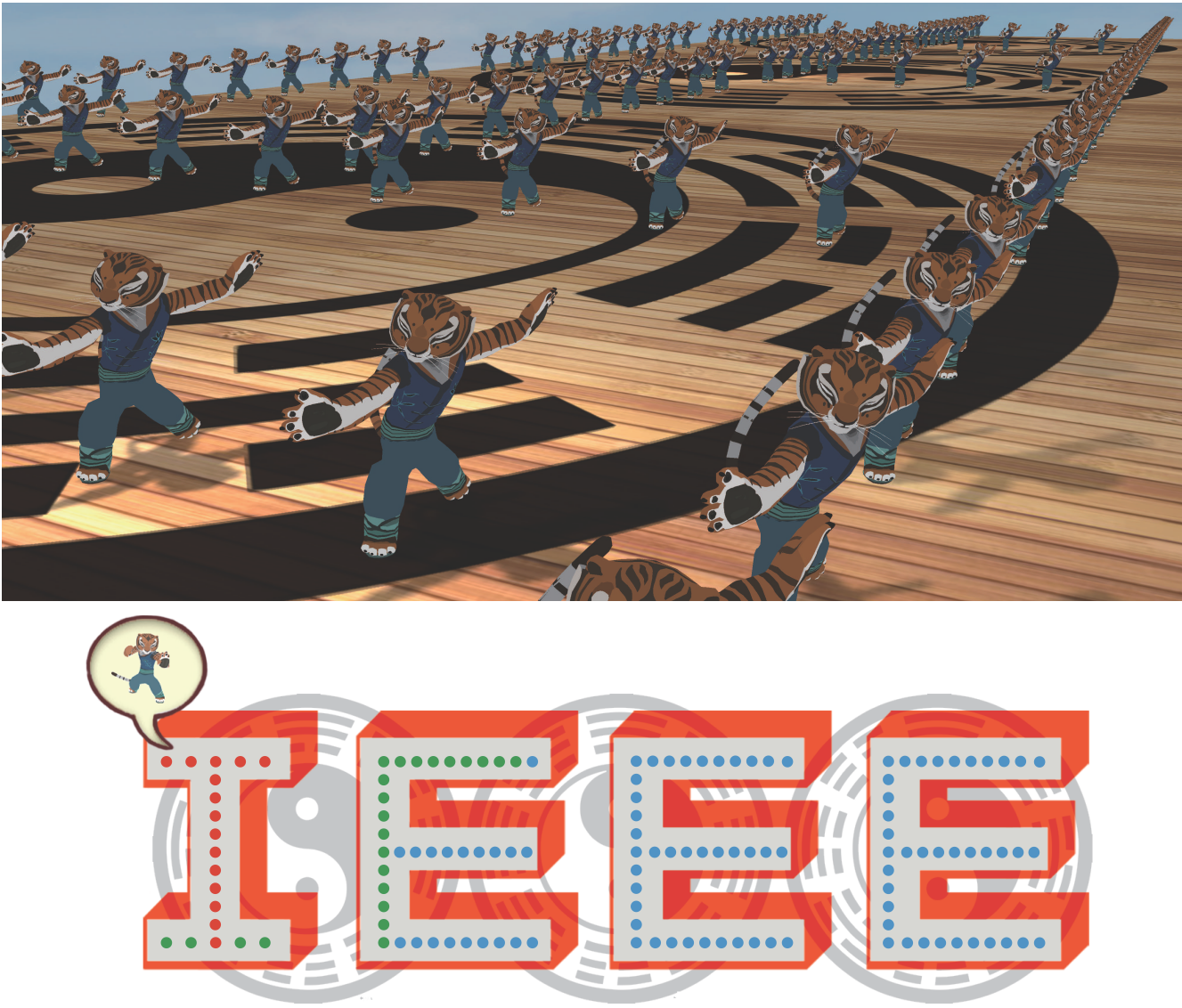

Data-Driven NPR Illustrations of Natural Flows in Oriental Painting

The strokes of rivers and falls are important elements in oriental paintings for expressing surface sprays, smooth water lines, and coherent diffusion. However, it is not trivial for general users to draw water flows with artistic styles, even with commercial software for generating oriental painting animations. To address this, this study designs a data-driven system to extract arbitrary painting styles from an existing oriental painting, animate the stylized strokes, and transfer the styles to other paintings. Our system extracts an initial flow pattern by analyzing the structure, placement density, and ink density of strokes and automatically computes a flow field according to water boundaries and flow obstacles. The flow field is generated by solving the Navier-Stokes equations in real time, and the painting style is extracted as patterns of strokes with their location, oscillation style, brush pattern, and ink density. Finally, the extracted strokes are dynamically generated and animated with the constructed field with controllable smoothness and temporal coherence. Furthermore, our system can transfer the extracted painting styles to animate the water flow of other oriental paintings. The overall flow animation is pleasant and delivers the spirit of the existing painting without the flickering artifacts common in stroke-based non-photorealistic rendering (NPR) animation.
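
For reference, the flow-field step can be read against the incompressible Navier-Stokes equations in the form commonly used for real-time fluid simulation in graphics. The abstract does not state which form or solver is used, so this is an assumption: $\mathbf{u}$ is the velocity field, $p$ the pressure, $\rho$ the density, $\nu$ the kinematic viscosity, and $\mathbf{f}$ external forces.

```latex
\frac{\partial \mathbf{u}}{\partial t}
  + (\mathbf{u} \cdot \nabla)\,\mathbf{u}
  = -\frac{1}{\rho}\,\nabla p + \nu\,\nabla^{2}\mathbf{u} + \mathbf{f},
\qquad
\nabla \cdot \mathbf{u} = 0
```

Under this reading, water boundaries and flow obstacles would enter as boundary conditions on $\mathbf{u}$.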

Resolution Independent Real-Time Vector Embedded Mesh for Animation

High-resolution textures are decisive for high rendering quality in the gaming and movie industries, but they burden memory usage, data transmission bandwidth, and rendering efficiency. Therefore, it is desirable to shade 3D objects with vector images such as Scalable Vector Graphics (SVG) for compactness and resolution independence. However, complicated geometric structure and high rendering cost limit the effectiveness and efficiency of vector texturing techniques. To overcome these limitations, this work proposes a real-time, resolution-independent, vector-embedded shading method for 3D animated objects. Our system first decomposes a vector image consisting of layered closed coloring regions into unifying-coloring units for mesh retriangulation and 1-D coloring texture construction. Here, coloring denotes color determination for a point based on an intermediate medium such as a raster or vector image, unifying denotes the usage of the same set of operations, and unifying-coloring denotes coloring with the same color computation operations. We then embed the coloring information and the distances to enclosing unit boundaries into the retriangulated vertices. This minimizes the embedded information, localizes vertex-embedded shading data, removes overdrawing inefficiency, and ensures fixed-length shading instructions, which keeps the data compact and avoids the indirect memory access and complex programming structures required by other shading and texturing schemes. Furthermore, stroking is the process of laying down a fixed-width pen-centered element along connected curves; our system decomposes these curves into segments at their curve-mesh intersections and embeds their control vertices and widths into the intersected triangles to avoid expensive distance computation. Overall, our algorithm enables high-quality, real-time GPU-based coloring for 3D animation rendering through an efficient SVG-embedded rendering pipeline while using a small amount of texture memory and transmission bandwidth.

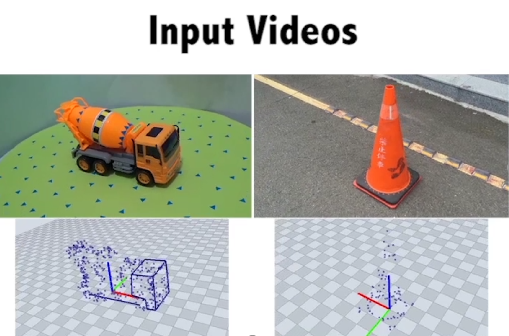

EZCam: WYSWYG Camera Manipulator for Path Design

With advances in the movie industry, composite interactions and complex visual effects require shooting the designed part of a scene for immersion. Traditionally, the director of photography (DP) plans a camera path by repeatedly reviewing and commenting on results rendered with it. Since this adjust-render-review process is neither immediate nor interactive, miscommunication occurs and makes the process ineffective and time-consuming. Therefore, this work proposes a What-You-See-Is-What-You-Get camera path reviewing system that lets the director interactively instruct and design camera paths. Our system consists of a camera handle, a parameter control board, and a camera tracking box with mutually perpendicular marker planes. When the handle is manipulated, the attached camera captures markers on the visible planes with the selected parameters to adjust the rendering view of the world. The director can directly examine the results and give immediate comments and feedback on transformation and parameter adjustment, which makes communication effective and reduces reviewing time. Finally, we conduct a set of qualitative and quantitative evaluations to show that our system is robust and efficient and that it supports interactive and immediate instructions for effective communication and improved efficiency during path design.

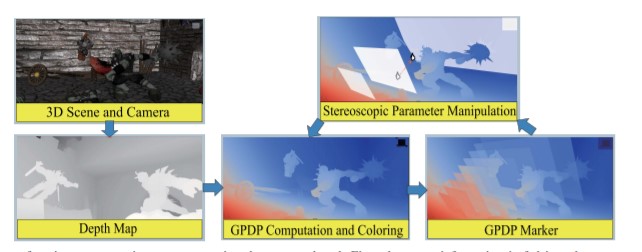

A Tool for Stereoscopic Parameter Setting Based on Geometric Perceived Depth Percentage

This study designs the geometric perceived depth percentage (GPDP) to quantify and shade the geometric depth of a scene, offering a practical way to visualize stereoscopic perception without any 3D device or special environment. In addition to the geometric relationship between object depth and focal distance, GPDP considers the screen width and viewing distance, which makes it a more intuitive control metric for stereoscopy than parallax or disparity. Along with the GPDP-based shading scheme, a stereoscopic comfort volume and depth perception markers can also be defined to form an intuitive auxiliary tool for manipulating stereoscopic parameters. These tools are easily implemented in any modern rendering pipeline, including interactive Autodesk Maya and Pixar's off-line RenderMan renderer.

Manga Vectorization and Manipulation with Procedural Simple Screentone

Manga are a popular artistic form around the world, and artists use simple line drawing and screentone to create all kinds of interesting productions. Vectorization helps reproduce these elements digitally so that content and intention are properly delivered on electronic devices. Therefore, this study aims to transform scanned Manga into a vector representation for interactive manipulation and real-time rendering at arbitrary resolution. Our system first decomposes the patch into rough Manga elements, including possible borders and shading regions, using adaptive binarization and a screentone detector. We classify detected screentone into simple and complex patterns. For simple screentone, our system extracts its properties to refine screentone borders, estimate lighting, compensate for missing strokes inside screentone regions, and later render it resolution-independently with our procedural shaders. Our system treats the rest as complex screentone areas and vectorizes them with our proposed line tracer, which locates the boundaries of all shading regions and polishes all shading borders with a curve-based Gaussian refiner. A user can lay down simple scribbles to cluster Manga elements intuitively into semantic components, and our system vectorizes these components into shading meshes with embedded Bezier curves as a unified foundation for consistent manipulation, including pattern manipulation, deformation, and lighting addition. Our system renders the shading regions in real time and resolution-independently with our procedural shaders, and renders drawing borders with the curve-based shader. For Manga manipulation, the proposed vector representation can be magnified without artifacts and deformed easily to generate interesting results.

Extra detail addition based on existing texture for animated news production

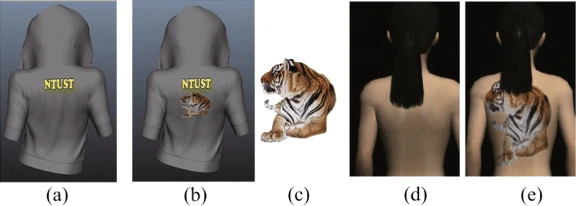

Animated news, introduced by Next Media Animation, has become increasingly popular because animation can tell a news story for which visual and audio footage may be missing. To meet the requirement of creating a 90-second animation within 2 hours, artists must quickly set up the required news elements by selecting existing 3D objects from their graphics asset database and adding distinguishing details such as tattoos, scars, and textural patterns onto the selected objects. Therefore, the detail addition process is necessary and must be easy to use, efficient, and robust, without any modification to the production pipeline and without adding an extra rendering pass to the rendering pipeline. This work aims at stitching extra details onto existing textures with a cube-based interface using well-designed texture coordinates. A texture cube of the detail texture is first created for artists to manipulate and adjust stitching properties. Then, the corresponding transformation between the original and detail textures is automatically computed to decompose the detail texture into triangular patches for composition with the original texture. Finally, a complete detail-added object texture is created for shading. The designed algorithm has been integrated into the Next Media Animation pipeline to accelerate animation production, and the results are satisfactory.

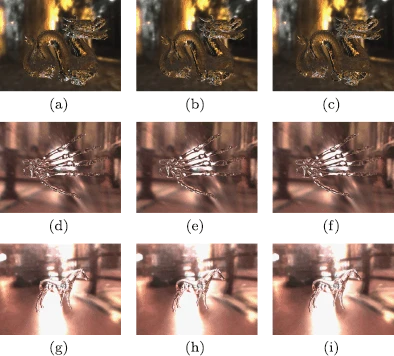

Geometry-Shader-Based Real-time Voxelization and Applications

This work proposes a new real-time voxelization algorithm based on newly available GPU functionalities and designs several applications that render complex lighting effects with the proposed voxelization method. The voxelization algorithm efficiently transforms a highly complex scene in surface representation into a set of voxels in one GPU pass using the geometry shader. The surface and volumetric properties of objects, such as opaqueness, refraction, and transmission, are directly recorded with newly available 3D textures. Using 3D textures removes the strenuous effort otherwise required to modify the encoding and decoding scheme when adjusting the voxel resolution. First, the surface and volumetric properties recorded in 3D textures can be used to interactively compute and render more realistic lighting effects, including the shadows of objects with complex occlusion and the refraction and transmission of transparent objects. The shadow can be rendered with an absorption coefficient, which is computed according to the number of surfaces drawn in each voxel during voxelization and used to compute the amount of light passing through partially occluded complex objects. Second, the surface normal, transmission coefficient, and refraction index recorded in each voxel can be used to simulate the refraction and transmission lighting effects of transparent objects using our multiple-surfaced refraction algorithm. Finally, the results demonstrate that our algorithm can transform a dynamic scene into the volumetric representation and render complex lighting effects in real time without any preprocessing.

Related Project

Shihsanhang Museum of Archaeology: VR Underwater Exploration

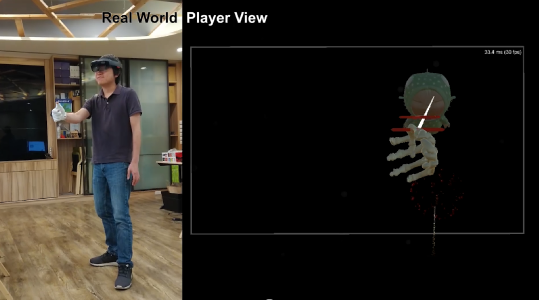

ADV Simulator using Augmented

Next-Generation VR Technology: Virtual-Real Integration

Smart Glove & MR

VR Dentist Training

Smart Glove

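International Games System (IGS): OverTake VR

Bureau of Cultural Heritage: Step into Glove Puppetry

International Games System (IGS): VR Fishing

Augmented Reality (AR) Applications

National Taiwan Museum of Fine Arts: AR Art Postcards

National Museum of Taiwan History: 台灣拍拍 (Taiwan Pai Pai)

Tango Guide for the Visually Impaired

Tamsui Historical Museum: Hobe Fort Cannon Restoration Project

Tango Real-Scene Reconstruction Technology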

Vector Graphics and Texturing

Ultra-high-definition displays are now available on the market, and vector graphics are a possible solution for describing multimedia content in versatile applications because of their compactness and scalability. Therefore, this project focuses on proposing a new data structure and vector algorithm that preserve the complex details of arbitrary images and maintain interactive editing and manipulation abilities. Furthermore, high-resolution textures are decisive for high rendering quality in the gaming and movie industries, but they burden memory usage, data transmission bandwidth, and rendering efficiency. It is therefore desirable to shade 3D objects with vector images such as Scalable Vector Graphics (SVG) for compactness and resolution independence, so we also focus on applying vector images to the rendering of 3D objects.